Welcome to the 13th edition of Gradient Ascent. I’m Albert Azout, a former entrepreneur and Founder/Managing Partner at Level Ventures. On a regular basis I encounter interesting scientific research, founders tackling important and difficult problems, and technologies that wow me. I am curious and passionate about machine learning, advanced computing, distributed systems, dev/data/ml-ops, deep tech, and life sciences tech. In this newsletter, I aim to share what I see, what it means, and why it’s important. I hope you enjoy my ramblings!

Is there an emerging VC fund manager or founder I should meet?

Send me an email

Want to connect?

Find me on LinkedIn

Hi all, I hope you are having an amazing holiday season! 🎉 It has been quite a while since my last post, but I hope/resolve to start posting more frequently in 2023. Over the last few months my team and I have been focused on launching Level Ventures, an AI-enabled investment firm focused on tech investing (I hope to share more about the firm in future updates).

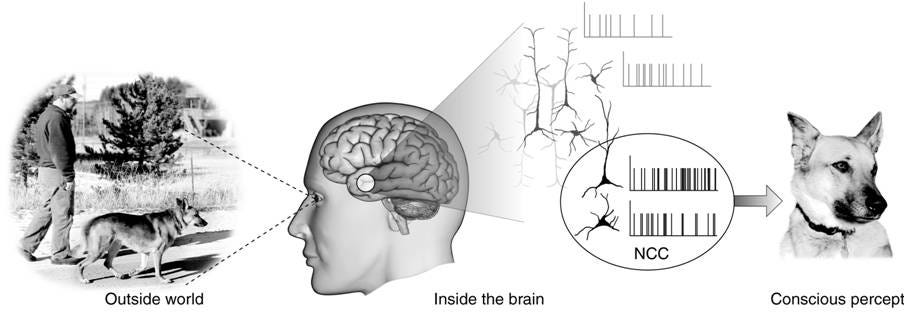

These days, I have been thinking a bit about consciousness. Since conscious experience is really all we have, understanding how mind arises from brain may be the fundamental question of human reality. In exploring the subject, I encountered several interesting concepts and ideas. One popular theory, Global Workspace Theory (GWT), proposes that the brain is divided into specialized modules, each providing specific functions, which are linked by long-distance connections. Given a particular task, attentional selection allows the contents of one module to be broadcast to, and shared with, the other modules. According to the theory, the broadcasting module, tracked over time, is the global workspace, and its contents are what constitute our “conscious awareness”. Progress in GWT has led to ideas around the Global Neuronal Workspace (GNW), essentially a neural realization of GWT. GNW has deep parallels with work on the Neural Correlates of Consciousness (NCC), which aims to quantify the relationship between mental states and neural states, that is, the minimal set of neuronal events and mechanisms sufficient for a specific conscious percept. In this way, NCC research aims to identify the mechanisms of consciousness as probed through external stimuli and the percepts they evoke.
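
To make the broadcast mechanism concrete, here is a minimal Python sketch of one workspace cycle. It is a toy under loud assumptions, not a model from the GWT literature. The `Module` class, its `propose` function returning a (content, salience) pair, and `workspace_step` are all hypothetical names, and “attentional selection” is reduced to picking the most salient proposal.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Module:
    """A hypothetical specialized processor (e.g. vision or audition)."""

    name: str
    # Assumption: a module maps a stimulus to (proposed content, salience).
    propose: Callable[[str], Tuple[str, float]]
    received: List[str] = field(default_factory=list)

    def receive(self, content: str) -> None:
        # Broadcast: every module sees whatever won the competition.
        self.received.append(content)


def workspace_step(modules: List[Module], stimulus: str) -> str:
    """One cycle: modules compete, attention selects a winner, the winner is broadcast."""
    proposals = [(m.name, *m.propose(stimulus)) for m in modules]
    # Attentional selection, reduced here to "most salient proposal wins".
    winner, content, _salience = max(proposals, key=lambda p: p[2])
    for m in modules:
        m.receive(content)
    return f"{winner}: {content}"


vision = Module("vision", lambda s: (f"saw {s}", 0.9 if s == "dog" else 0.2))
audition = Module("audition", lambda s: (f"heard {s}", 0.8 if s == "bark" else 0.1))
# The sequence of winners plays the role of the workspace indexed over time.
trace = [workspace_step([vision, audition], s) for s in ["dog", "bark"]]
# trace == ["vision: saw dog", "audition: heard bark"]
```

Each call is one moment in time. Different modules win at different moments, yet every module ends up holding the same broadcast history, which is the “sharing” the theory describes.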

Subsequently, there have been efforts to apply GWT to deep learning networks. Yoshua Bengio provides such an approach in his paper The Consciousness Prior. Bengio treats the output, or learned representation, of a deep network as an unconscious representation state. Of course, to be useful, a learned representation should disentangle abstract explanatory factors, uncovering latent concepts within the data. Consciousness, in this particular model, is a low-dimensional representation of this unconscious state. It is produced by the core consciousness process, C, which combines the unconscious state with a memory structure (the memory at time t), the previous conscious state, and a random input. The resulting conscious state is essentially the small set of elements or concepts upon which attention is focused.
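
The selection step can be sketched in a few lines. This is a hypothetical illustration, not Bengio’s implementation: the paper treats C as a learned function, whereas `conscious_step` below hard-codes an assumed scoring rule. Each element of the unconscious state h_t is scored by its own magnitude, plus a bonus `alpha` if it was in the previous conscious state, plus `beta` times the number of times memory has recorded it being attended, plus Gaussian noise standing in for the random input. Only the top `k` elements survive as the conscious state c_t.

```python
import random
from typing import Dict, List, Optional, Tuple


def conscious_step(
    h: List[float],
    prev_c: Dict[int, float],
    memory: Dict[int, int],
    k: int = 2,
    alpha: float = 1.0,
    beta: float = 0.5,
    noise_scale: float = 0.1,
    rng: Optional[random.Random] = None,
) -> Tuple[Dict[int, float], Dict[int, int]]:
    """Select a k-element conscious state c_t from the high-dimensional state h_t.

    Hypothetical scoring rule (not from the paper): salience |h_i|, plus alpha if
    element i was in c_{t-1}, plus beta times its count in memory, plus noise z_t.
    """
    if rng is None:
        rng = random.Random()
    scores = [
        abs(value)
        + alpha * (1.0 if i in prev_c else 0.0)
        + beta * memory.get(i, 0)
        + noise_scale * rng.gauss(0.0, 1.0)
        for i, value in enumerate(h)
    ]
    # Attention bottleneck: keep only the k highest-scoring elements.
    top = sorted(range(len(h)), key=lambda i: scores[i], reverse=True)[:k]
    c_t = {i: h[i] for i in sorted(top)}
    # Memory update: count which elements were attended at this step.
    new_memory = dict(memory)
    for i in top:
        new_memory[i] = new_memory.get(i, 0) + 1
    return c_t, new_memory


# Two steps with the noise disabled so the result is deterministic.
c1, m1 = conscious_step([0.1, 2.0, -3.0, 0.5, 0.05], prev_c={}, memory={}, k=2, noise_scale=0.0)
c2, m2 = conscious_step([1.5, 0.2, 0.3, 1.4, 0.0], prev_c=c1, memory=m1, k=2, noise_scale=0.0)
```

In the second call, elements 0 and 3 are individually more salient, but the bonuses from the previous conscious state and memory keep elements 1 and 2 in focus. With `alpha=0.0` and `beta=0.0`, the same call would select elements 0 and 3 instead. The hard top-k cutoff is what makes the conscious state low-dimensional.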

However, these approaches seem somewhat unsatisfying: a theory of consciousness should explain more than the mind’s attentional awareness. For instance, a theory should articulate which entities are conscious (a baby, a parrot, a single-celled organism), how consciousness varies along a continuum, which physical substrates can give rise to consciousness (e.g. a computer), etc.

Recently, I read Christof Koch’s book The Feeling of Life Itself. Koch introduces Integrated Information Theory (IIT), originally the work of Giulio Tononi. IIT aims to tackle the “hard problem of consciousness” by accepting the existence of consciousness as evidence (as opposed to starting bottom-up from the mechanistic/physical properties of a conscious system) and then reasoning about the properties a physical substrate would require in order to realize consciousness. The theory makes a conceptual leap from phenomenology to mechanism, in that the properties of the physical system must be constrained by the properties of the experience; the theory posits a one-to-one identity between consciousness and the physical system. IIT introduces several axioms that it takes to be the essential and complete properties of conscious experience.

The axioms of IIT are as follows:

Existence - Consciousness exists from a purely internal and subjective perspective. This is taken as a given: “I think, therefore I am”.

Composition - Consciousness is structured: each experience is composed of multiple phenomenological distinctions (e.g. I am seeing a particular view from a particular window).

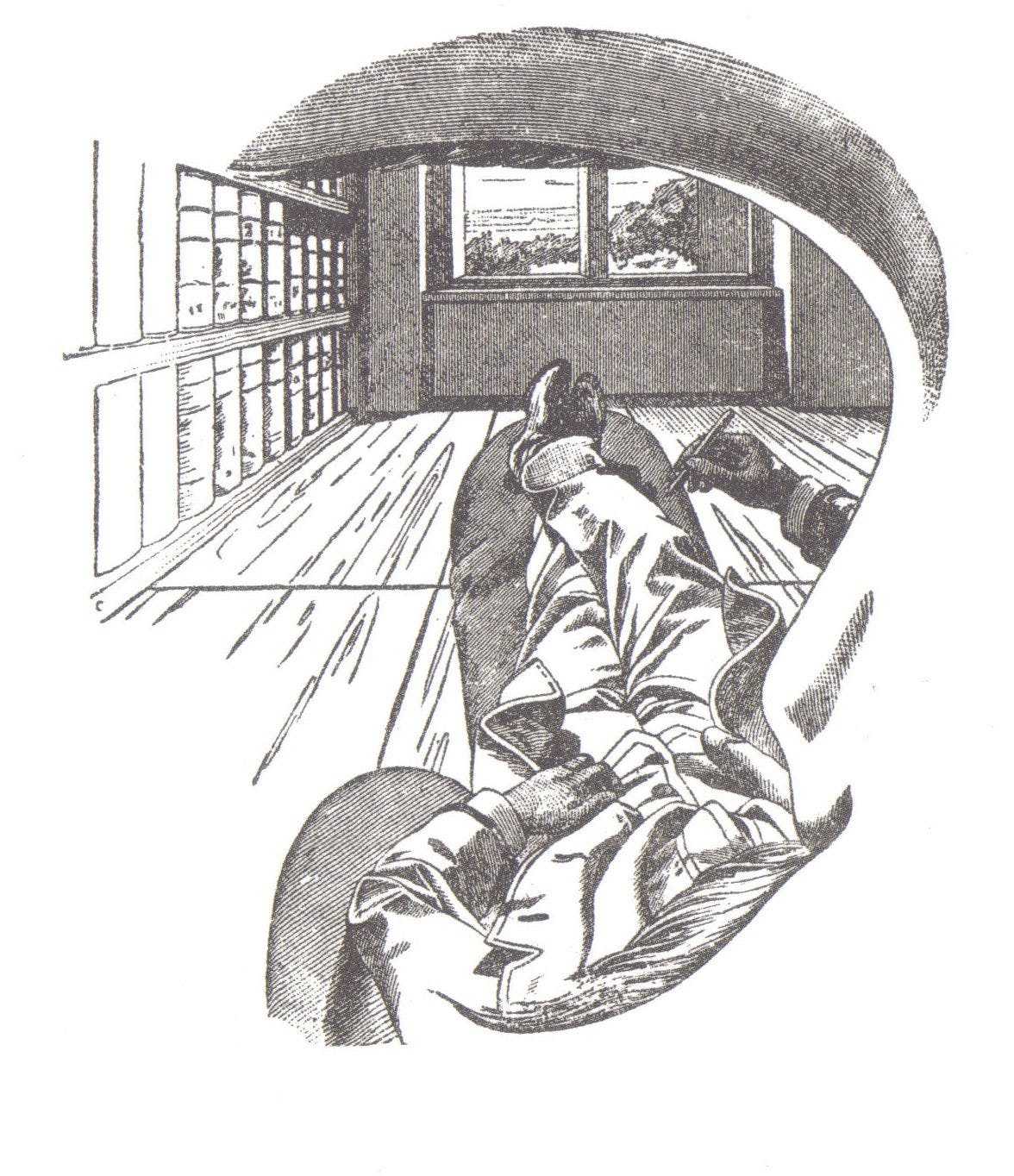

Information - Consciousness is specific: each experience is the particular way it is, being composed of a specific set of specific phenomenal distinctions, and so differs from other possible experiences (differentiation). For example, my office has a particular spatial configuration (the couch is here, the chair is there, my legs are crossed just so) to the exclusion of other possible configurations.

Integration - Consciousness is unified: each experience is irreducible and cannot be subdivided into non-interdependent, disjoint subsets of phenomenal distinctions (e.g. I experience a black pen in my hand, not a colorless pen plus a separate patch of black).

Exclusion - Consciousness is definite in content and spatiotemporal resolution: each experience has the set of phenomenal distinctions it has, neither less (a subset) nor more (a superset), and it flows at the speed it flows, neither faster nor slower. For instance, I am seeing the room with a chair and a bookshelf, but I am not having an experience with more or less content (e.g. one in which I also see my dog and feel my palms getting sweaty). In addition, I experience the world at a particular temporal resolution (e.g. in 100-millisecond blocks, not one-hour blocks).

The axioms above are meant to completely describe the experience of consciousness. IIT then attempts to explain how these axioms might be realized in physical systems (for instance, a neural network or a set of logic gates) in the form of postulates, which are pretty dense. The key idea of IIT, however, is that these properties of consciousness can be explained by a causal system: a conscious system must exist from its own intrinsic perspective, independent of external observers. That is, a conscious system is a set of elements in a state that has cause-effect power upon itself. The theory also proposes measurements that quantify the extent of the system’s internal causality (the maximally irreducible conceptual structure, or MICS, whose quantity of integrated information is called phi, Φ). Φ is obtained by minimizing, over all subdivisions of a physical system into two parts A and B, some measure of the mutual information between A’s outputs and B’s inputs and vice versa. As Scott Aaronson argues:

One immediate consequence of any definition like this is that all sorts of simple physical systems (a thermostat, a photodiode, etc.) will turn out to have small but nonzero Φ values.
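
The simplified description of Φ above is easy to compute for tiny systems. The sketch below is an assumption-laden toy, not IIT’s actual Φ, whose full calculation works with cause-effect repertoires over every mechanism and is far more involved. It assumes n binary elements with a deterministic `update` rule and a uniform distribution over current states. For each bipartition (A, B), it adds the mutual information between A now and B at the next step to the mutual information between B now and A at the next step, and `toy_phi` (a hypothetical name) returns the minimum over all bipartitions.

```python
import itertools
import math
from collections import Counter
from typing import Callable, List, Sequence, Tuple

State = Tuple[int, ...]


def mutual_information(pairs: Sequence[Tuple[State, State]]) -> float:
    """I(X;Y) in bits, treating each (x, y) sample as equally likely."""
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    return sum(
        (c / n) * math.log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in joint.items()
    )


def project(state: State, indices: Sequence[int]) -> State:
    """Restrict a full system state to the elements in one part."""
    return tuple(state[i] for i in indices)


def toy_phi(update: Callable[[State], State], n: int) -> float:
    """Minimum over bipartitions (A, B) of I(A_t; B_t+1) + I(B_t; A_t+1)."""
    if n < 2:
        raise ValueError("need at least two elements to form a bipartition")
    # Assumption: every current state is equally likely (uniform prior).
    transitions: List[Tuple[State, State]] = [
        (s, update(s)) for s in itertools.product((0, 1), repeat=n)
    ]
    best = math.inf
    for size in range(1, n // 2 + 1):
        for part_a in itertools.combinations(range(n), size):
            part_b = tuple(i for i in range(n) if i not in part_a)
            a_to_b = [(project(x, part_a), project(y, part_b)) for x, y in transitions]
            b_to_a = [(project(x, part_b), project(y, part_a)) for x, y in transitions]
            best = min(best, mutual_information(a_to_b) + mutual_information(b_to_a))
    return best


def swap(s: State) -> State:
    return (s[1], s[0])  # each element copies the other: two-way coupling


def copy_first(s: State) -> State:
    return (s[0], s[0])  # element 1 copies element 0: one-way coupling


def isolated(s: State) -> State:
    return s  # each element only copies itself: no coupling


phis = {f.__name__: toy_phi(f, 2) for f in (swap, copy_first, isolated)}
# phis == {"swap": 2.0, "copy_first": 1.0, "isolated": 0.0}
```

Two mutually coupled elements score 2 bits, a one-way copy scores 1 bit, and two isolated elements score 0. Any causal interaction across the weakest cut yields a nonzero value, which is the sense in which very simple devices end up with nonzero Φ under definitions of this kind.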

Under IIT, then, consciousness can be realized by any system with intrinsic causal information flow (i.e. integrated information), so many types of physical systems can have the qualities of consciousness (e.g. a thermostat, to a small degree). Additionally, a real-world social communication network, constructed from the causal flow of information between individuals, could in some sense exhibit consciousness. In this case, network participants act as mechanisms whose prior and future states are shaped by the network topology. Whether or not IIT captures the full scope of consciousness, it definitely says something about how the transformation of information and causal reflexivity are related to intelligence. Perhaps, when IIT is combined with attentional selection/awareness and our mind’s ability to drive its own thoughts, we get closer to unlocking the mystery of mind from brain 🧠.

NON-SEQUITURS

I recommend Vaclav Smil’s new book How the World Really Works: