Welcome to the 2nd edition of Gradient Ascent. I’m Albert Azout, a former entrepreneur and current Partner at Cota Capital. I regularly encounter interesting scientific research, startups tackling important and difficult problems, and technologies that wow me. I am curious about and passionate about machine learning, advanced computing, distributed systems, and DevOps, DataOps, and MLOps. In this newsletter, I aim to share what I see, what it means, and why it’s important. I hope you enjoy my ramblings!

Is there a founder I should meet?

Send me a note at albert@cotacapital.com

Want to connect?

Find me on LinkedIn, AngelList, Twitter

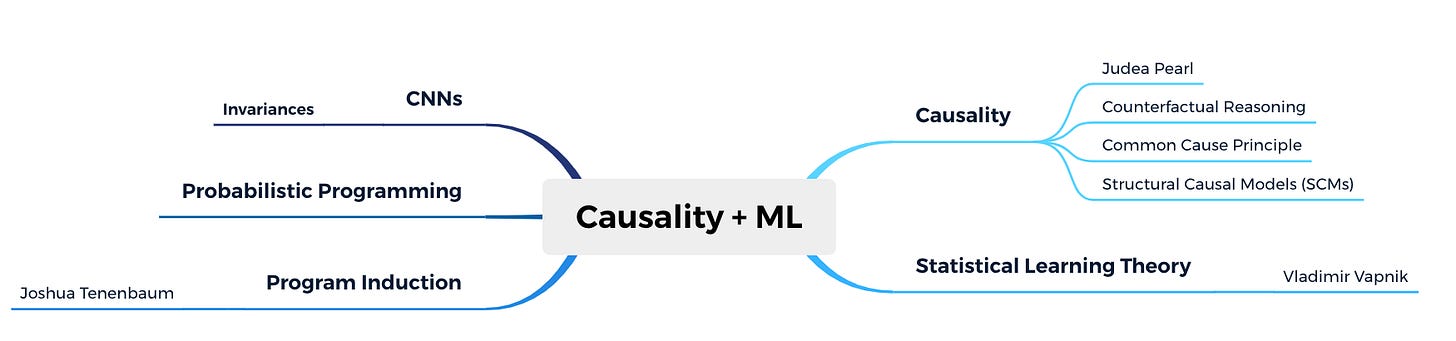

And today’s concept cloud…*

Do our brains learn programs?

I’ve been thinking about Judea Pearl’s work on causality (if you haven’t already, definitely read The Book of Why and watch Lex Fridman’s amazing interview with him).

Through random mutation and natural selection over millions of years, the human mind has co-evolved with the physical world. For example, our perceptual systems have evolved to process the visual data we encounter in that world. Inspired by the brain’s neural architecture, convolutional nets exploit geometric symmetries of the visual world when detecting objects: weight sharing makes them translation-equivariant by construction, pooling adds some local translation invariance, and robustness to rotation, viewpoint, and similar changes is usually learned, often with help from data augmentation.
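
To make the translation point concrete, here is a minimal sketch in plain Python. It is my own toy (the signal and kernel values are made up), not code from any paper or library. It applies one shared kernel at every position of a 1D signal, the way a convolutional layer does, and checks that shifting the input shifts the output by the same amount.

```python
# Toy illustration (hypothetical values): a 1D "valid" cross-correlation with a
# single shared kernel, the core operation a convolutional layer applies.

def conv1d(signal, kernel):
    """Slide the same kernel across the signal (weight sharing)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def shift_right(xs, n):
    """Shift a sequence right by n positions, padding with zeros on the left."""
    return [0] * n + xs[: len(xs) - n]

signal = [0, 0, 1, 3, 1, 0, 0, 0, 0, 0]  # a small "object" near the start
kernel = [1, 2, 1]                       # a fixed, made-up feature detector

response = conv1d(signal, kernel)
shifted_response = conv1d(shift_right(signal, 3), kernel)

# Equivariance: shifting the input by 3 shifts the feature map by 3.
print(response)          # [1, 5, 8, 5, 1, 0, 0, 0]
print(shifted_response)  # [0, 0, 0, 1, 5, 8, 5, 1]
assert shifted_response == shift_right(response, 3)
```

This equivariance holds exactly only while the pattern stays away from the borders, and nothing in the operation itself makes it rotation-invariant; that part has to be learned.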

In the neocortex, we have evolved faculties for imagination and abstract reasoning. We imagine the future, explore actions and interventions, and predict and estimate possible outcomes. In imagining, we build causal models of our world, and we interrogate these models with interventional and counterfactual questions (see picture below).

This is how we plan. How we strategize. How we survive.

[source: Causal Hierarchy, The Book of Why]

Judea Pearl argues that to build systems with human-level intelligence, we need to model cause-effect relationships. Most of today’s machine learning systems sit on the first level of Pearl’s Causal Hierarchy: Association. These systems are model-free, learning purely statistical relationships from observed data. Accordingly, the trends that support them are (1) massive amounts of data, (2) high-capacity ML models, and (3) high-performance computing. High-capacity models require lots of data to train, and high-performance computing is a must for optimizing large-scale model parameters [see Causality for Machine Learning].

For machine learning to move from Association to Intervention and then to Counterfactuals, machines must not only observe data but also learn adaptable models of the world.
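
A toy structural causal model helps illustrate the three levels. The sketch below is my own hypothetical example (the variables, coefficients, and sample size are all made up, not taken from Pearl’s books): a confounder Z drives both a treatment X and an outcome Y, so the purely associational difference overstates X’s true effect. Simulating the intervention do(X) recovers that effect, and a counterfactual for a single observed unit is answered by inferring its noise term and replaying the model.

```python
import random

# Hypothetical toy structural causal model (SCM):
#   Z   ~ Bernoulli(0.5)                 confounder
#   X   ~ Bernoulli(0.8 if Z else 0.2)   treatment, more likely when Z = 1
#   U_Y ~ Normal(0, 1)                   exogenous noise
#   Y   = 1.0 * X + 2.0 * Z + U_Y        true causal effect of X on Y is 1.0

def avg(values):
    values = list(values)
    return sum(values) / len(values)

def sample(rng, do_x=None):
    """Draw one unit; if do_x is given, X is set by intervention."""
    z = 1 if rng.random() < 0.5 else 0
    if do_x is None:
        x = 1 if rng.random() < (0.8 if z else 0.2) else 0
    else:
        x = do_x  # intervention: cut the Z -> X edge
    u_y = rng.gauss(0.0, 1.0)
    y = 1.0 * x + 2.0 * z + u_y
    return z, x, y

rng = random.Random(0)
n = 100_000

# Level 1, Association: E[Y | X=1] - E[Y | X=0] from observational data.
obs = [sample(rng) for _ in range(n)]
assoc = (avg(y for _, x, y in obs if x == 1)
         - avg(y for _, x, y in obs if x == 0))

# Level 2, Intervention: E[Y | do(X=1)] - E[Y | do(X=0)].
interv = (avg(sample(rng, do_x=1)[2] for _ in range(n))
          - avg(sample(rng, do_x=0)[2] for _ in range(n)))

# Level 3, Counterfactual for one observed unit (abduction, action, prediction):
# we saw Z=1, X=1, Y=3.5. What would Y have been had X been 0?
z_obs, x_obs, y_obs = 1, 1, 3.5
u_y = y_obs - 1.0 * x_obs - 2.0 * z_obs  # abduction: infer this unit's noise
y_cf = 1.0 * 0 + 2.0 * z_obs + u_y       # action do(X=0), then prediction

print(f"associational difference: {assoc:.2f}")   # about 2.2, inflated by Z
print(f"interventional effect:    {interv:.2f}")  # about 1.0, the true effect
print(f"counterfactual Y:         {y_cf:.2f}")    # 2.50
```

Notice that only the second and third levels need the model itself (the structural equations), which is exactly what a purely associational learner never builds.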

We see model building as the hallmark of human-level learning, or explaining observed data through the construction of causal models of the world [source: Building Machines That Learn and Think Like People].

Some thoughts on this:

The drivers of such causal, model-based systems cannot be the amount of training data (after all, humans need only a few examples to learn), and perhaps not even the extent of our computational resources, but something else entirely.

The components may include (a) generative approaches that can simulate observations in a directed way and (b) frameworks for quickly learning and adapting models built from simpler components.

Joshua Tenenbaum has produced research on program induction that I find very interesting and relevant. The idea behind program induction is to construct programs that compute some desired function, where the function is specified by training data consisting of input-output pairs. In the case of probabilistic programs (which are generative), we define a set of allowable programs, and learning searches for the programs that most likely generated the data. This approach can learn from little data and generalize to unseen environments (read Building Machines That Learn and Think Like People).
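
As a rough illustration of the search idea, here is a deliberately tiny sketch. It is not Tenenbaum’s method: it swaps in a deterministic enumerative search over a hypothetical five-primitive language (`inc`, `dec`, `double`, `square`, and `neg` are my own made-up names) and uses program length as a stand-in simplicity prior. Three input-output pairs are enough for it to recover f(x) = (x + 1)^2.

```python
from itertools import product

# Hypothetical tiny language: a program is a sequence of unary integer primitives.
PRIMITIVES = {
    "inc": lambda v: v + 1,
    "dec": lambda v: v - 1,
    "double": lambda v: v * 2,
    "square": lambda v: v * v,
    "neg": lambda v: -v,
}

def run(program, v):
    """Apply the program's primitives in order, left to right."""
    for name in program:
        v = PRIMITIVES[name](v)
    return v

def induce(examples, max_len=3):
    """Return a shortest program consistent with all input-output pairs.

    Enumerating by increasing length acts like a simplicity prior
    P(program) proportional to 2^-length: among programs that fit the data,
    a shortest one has the highest posterior (ties broken by enumeration order).
    """
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                return program
    return None  # nothing in the search space explains the data

# Three examples of f(x) = (x + 1)^2
examples = [(1, 4), (2, 9), (3, 16)]
prog = induce(examples)
print(prog)              # ('inc', 'square')
print(run(prog, 10))     # 121, generalizing beyond the examples
```

A probabilistic version would replace the all-or-nothing consistency check with a likelihood that tolerates noisy examples, and would sample programs rather than enumerate them exhaustively, but the shape (a space of allowable programs plus a preference for simple ones) is the same.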

I hope to dig into these topics a bit more in a future edition.

Please do send me any interesting research you encounter. 🧠

Stay safe everyone!

*When researching topic areas, I have been using a neat mind-mapping tool called XMind. I will include a concept cloud in all my posts.

Disclosures

While the author of this publication is a Partner with Cota Capital Management, LLC (“Cota Capital”), the views expressed are those of the author alone and do not necessarily reflect the views of Cota Capital or any of its affiliates. Certain information presented herein has been provided by, or obtained from, third-party sources. The author strives to be accurate, but neither the author nor Cota Capital guarantees the accuracy or completeness of any information.

You should not construe any of the information in this publication as investment advice. Cota Capital and the author are not acting as investment advisers or otherwise making any recommendation to invest in any security. Under no circumstances should this publication be construed as an offer soliciting the purchase or sale of any security or interest in any pooled investment vehicle managed by Cota Capital. This publication is not directed to any investors or potential investors, and does not constitute an offer to sell — or a solicitation of an offer to buy — any securities, and may not be used or relied upon in evaluating the merits of any investment.

The publication may include forward-looking information or predictions about future events, such as technological trends. Such statements are not guarantees of future results and are subject to certain risks, uncertainties and assumptions that are difficult to predict. The information herein will become stale over time. Cota Capital and the author are not obligated to revise or update any statements herein for any reason or to notify you of any such change, revision or update.